CPU-friendly Code

O-notation isn't everything

Hello, World

- These slides are here: is.gd/cpufriend

- Borislav aka Bobi aka iboB

- Mostly a C++ programmer

- Mostly a game programmer

- I also do open source

Optimizations

This talk is to a great extent about those 3%

This talk is about fundamental principles and not about algorithms

Why CPU and not GPU?

- The GPU is crazy-mega-powerful for some problems.

- Blah-blah architectural differences

- The GPU is a bad choice for these problems:

- Few data: PCI-bus overhead

- Non-parallelizable algorithms

- Sometimes we're GPU-bound

- Still some things apply equaly for GPU-s

Add more hardware!

We'll focus on low-level concepts and won't talk about multi-threading.

We will talk about hardware... a least a bit

O-notation isn't everything

O-notation

- An asymptotic evaluation of the complexity of an algorithm

- Very useful and widely used

- Θ-notation, Ω-notation, etc

- We evaluate the growth of the complexity based on the growth of the input

- Little-letter notation (о, ω) - too conservative

- The real complexity still exists

Example: О(1)

Take the sixth element from a container.

std::vector<int> v;

...

int sixth = v[6]; // !Theoretical real complexity: 1

std::list<int> l;

...

auto isixth = l.begin();

for(int i=0; i<6; ++i) ++isixth;

int sixth = *isixth; // !Theoretical real complexity: 6

Example: О(n)

Sum the elements from a list.

int sum = 0;

for(auto elem : container) sum += elem;Theoretical real complexity: n

point3 sum = {0, 0, 0};

for (const auto& elem : container) {

sum.x += elem.x;

sum.y += elem.y;

sum.z += elem.z;

}Theoretical real complexity: 3n

Hacking O-notation

Constant complexity: 1000.

- Linear for less than a 1000 elements is faster

- Quadratc for less than 32 is faster

- Cubic for less than 10 is faster

Linear complexity: 100n.

- Quadratic for less than 100 is faster

- Cubic for less than 10 is faster

...

It's useful to have an idea of the real complexity and the size of the input

Demo 1 and 2

Modern hardware makes the real complexity

pratically impossible to calculate

But nevertheless...

Cache Locality

Cache Locality

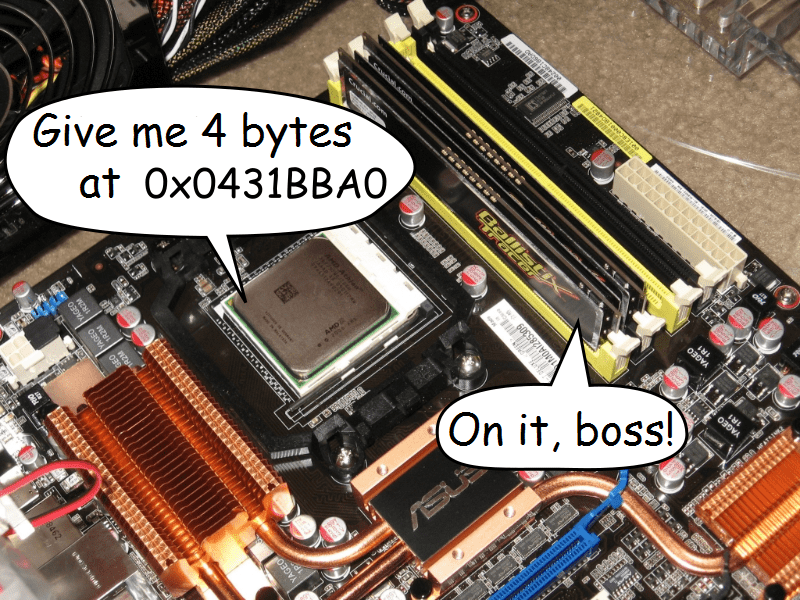

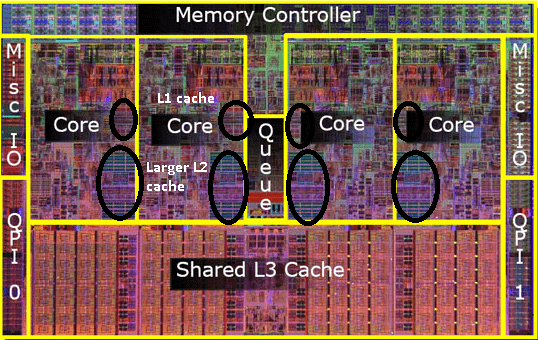

Cache ($)

Cache is memory close to the CPU

Cache levels - the closer, the faster

RAM (→ L4?) → L3 → L2 → L1

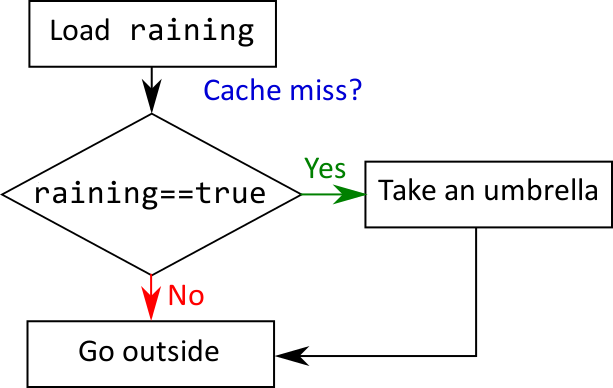

How does this work?

- The data is in the cache - cache hit

- It it's not - cache miss

- L1: ~4 cycles

- L2: ~10 cycles

- L3: ~20 cycles

- RAM: ~100 cycles

Responding to "Give me data on address X!" leads to potentially different strategies. For example:

4KB to L3 → 256B to L2 → 64B to L1

How much does this cost?

What does this mean?

- Fast stuff:

- Value types / PODs

- Contiguous memory

- Slow stuff:

- Indirections (pointers)

- This includes dynamic polymorphism

- Array-of-structs vs Struct-of-arrays

Demo 3 and 1'

Types of cache

- Data - Our data. What the examples were about

- Instruction - The code of the program. A demo is hard :(

- TLB - for virtual memory. We don't have control over this

- All of these are L1. L2+ are mixed

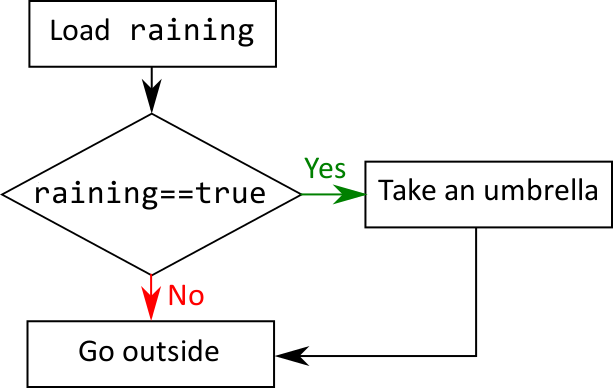

Branch prediction

Branch

Well... litearlly a branch in the program

Branch

Well... litearlly a branch in the program

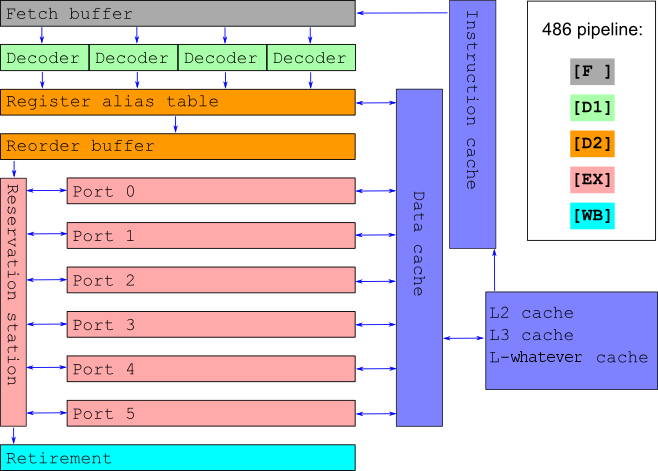

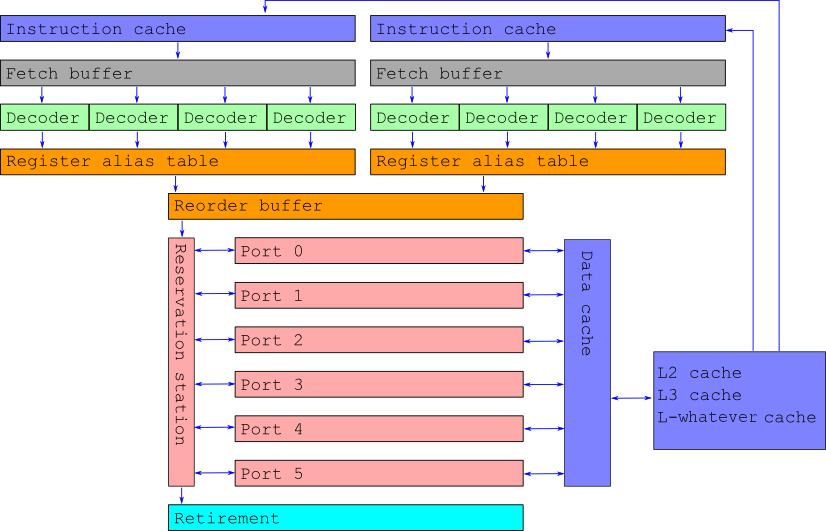

CPU ОоО Pipeline

Waiting is expensive

Branch predictors

- Branch @ 0x12300 :

y,n,n,y,y,y,y,y - Loop predictors - this is a thing

__builtin_expect- borderline pointless- If we have no clue, execute both branches

Speculative execution: The execution of code, which possibly shouldn't be executed

Demo 4

Conclusions

if-s are not that scary anymore- Dense is better than sparse

Syscalls and allocations

Especially allocations!

Hammer time

Syscall

We leave the reaches of our program and boldly go into the world of the operating system

Syscall examples

- Not functions like

sin, cos, qsort - I/O (input and output)

- Managing threads and processes

- Driver calls like OpenGL

- Allocations and deallocations

Allocations

- Obvious cost

- The OS has to do a bunch of stuff.

- Physical memory: pages

- Free chunk of consecutive pages

- Sync between threads and processes

- Commit size - it's very helpful

- Not-so-obvious cost

- Zeroing/clearing of memory

- Fragmentation

- Every allocation is a syscall

Demo 5

What can we do?

- Object and memory pools

- Why is Java faster than C++?

- Reusing objects

- Code it like it's 1969. Fixed size arrays

- Reserve (for example

std::vector::reserve)

Demo 5'

What does this cost?

- More memory

- Code which is more complicated and harder to read

- Longer loading time

- malloc-lite: locally solve the problems which the OS is trying to solve

- Luckily in 90% ot the cases we can go away with 10% of the effort.

Intrinsics

Instruction set

- What can our CPU do?

- Execute instructions

- Not all instructions are accessible through С or С++

- For example

rdtsc - or

wbinvd - Most compilers allow us to access them through intrinsics

- gcc and clang:

__builtin_??? - msvc:

_???, _m_??, _mm_??

Demo 6

Drawbacks

- Compiler-specific

- Architecture-specific

#ifdefto the rescue

It's worth goign through them. Maybe you'll have a revelation

SIMD and beyond

Single Instruction Multiple Data

- x86

- MMX, SSE, SSE2, SSE3, SSE4, SSE5, AVX, FMA, AVX2, AVX-512

- ARM

- NEON, ARMv8

- Accessed through intrinsics (or... you know Assembly)

- Mind the alignment

- Contemporary compilers are powerful!

Beyond

- We just scratched the surface

- To make good use of the hardware, you must know it

- Read more disassembly

- Always benchmark

- ???

- Profit

Bonus: Hyperthreading

Bonus: Modern Vulnerabilities

char* memory = new char[0x200];

if (something_false) {

char value = *(char*)(KERNEL_MEM_PTR);

int index = (value & 1) * 0x100;

puts(memory[index]);

}

measure_time_to_access(memory[0]);

measure_time_to_access(memory[0x100]);

End!

Questions?

Borislav Stanimirov / ibob.github.io / @stanimirovb

The demos are here: https://github.com/iboB/cfc-demos

These slides are here: https://ibob.github.io/slides/cpu-friendly-code-2/

Slides license Creative Commons By 3.0